Jae Hyun Lim

Member of Technical Staff @ Microsoft AI

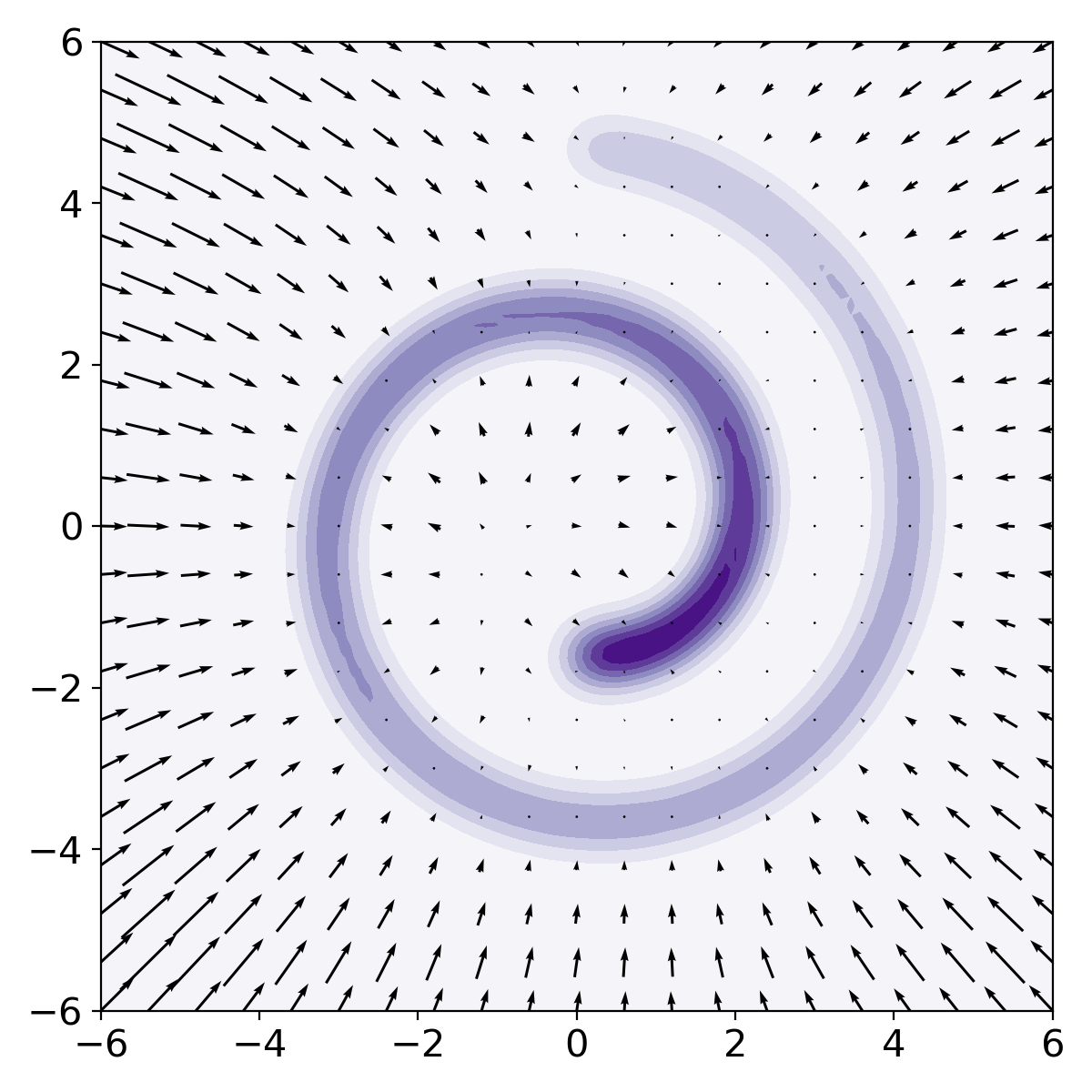

I am a Member of Technical Staff at Microsoft AI (London office). My research during graduate studies centered on deep generative modeling, particularly diffusion- and flow-based approaches, as well as approximate inference and deep reinforcement learning. I completed my Ph.D. in Computer Science at Mila, Université de Montréal, advised by Chris Pal. Before that, I received a B.S. in Bio and Brain Engineering and an M.S. in Electrical Engineering from KAIST.

email | google scholar | github | linkedin | x

news

| Dec 1, 2025 | I’ve started my new role at Microsoft AI! |

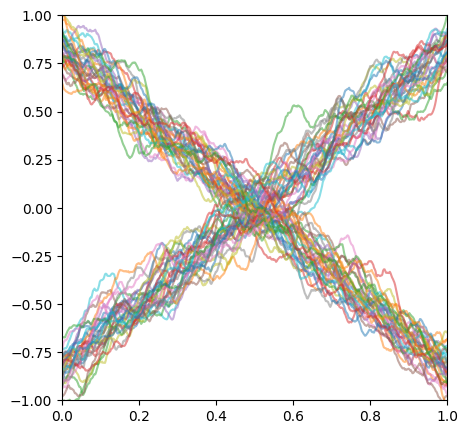

| Sep 8, 2025 | Our paper Score-Based Diffusion Models in Function Space is published in JMLR! |

| Oct 15, 2021 | I was selected to receive a NeurIPS 2021 Outstanding Reviewer Award (top 8% of reviewers)! |

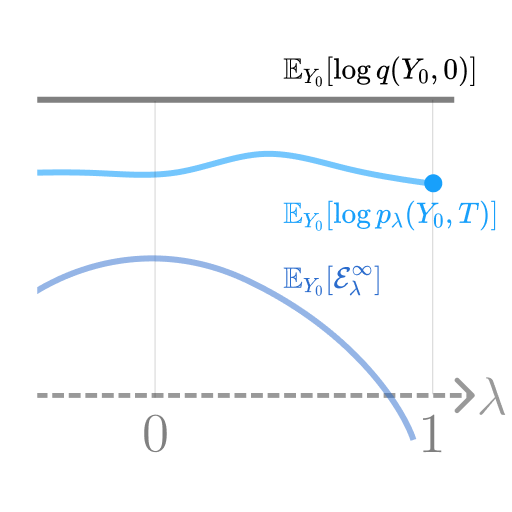

| Sep 29, 2021 | Our paper A Variational Perspective on Diffusion-Based Generative Models and Score Matching is accepted to NeurIPS 2021 and selected as a spotlight! |

| Jan 1, 2021 | Our paper Bijective-Contrastive Estimation is accepted to the AABI Symposium 2021 and selected for a contributed talk! |

projects

- Neural Multisensory Scene Inference. In Advances in Neural Information Processing Systems, 2019. [project page] [code] [pdf]